The Model Context Protocol (MCP) is often described as USB-C for AI applications: one standard connector for data access and tool integration. If you’ve ever struggled to connect AI models to live data, or searched for a low-friction way to bring AI systems into business workflows, keep reading. This post covers the nuts and bolts of MCP, its architecture, its benefits, and why it is gaining traction as a standard for AI development across multiple domains.

1. Introduction: The AI Landscape and the Birth of MCP

In this ever-evolving field of artificial intelligence (AI), large language models (LLMs) have proven capable of generating human-like text, handling complex queries, and even performing creative tasks. However, the utility of these models is often curbed by their isolation from timely, real-world data. Think about a scenario: you have a powerful LLM at your disposal, but it can’t tap into your company’s latest database entries or up-to-the-minute analytics. You’re left with a partial solution—an AI that’s bright and articulate but essentially living in a vacuum.

Enter the Model Context Protocol (MCP). Developed by Anthropic, MCP aims to break down this silo by providing a standardized framework for connecting LLMs to a variety of data sources and tools. The goal is to ensure that artificial intelligence not only understands words but also the ever-changing contexts behind them, gleaned from real-time information.

2. What is the Model Context Protocol (MCP)?

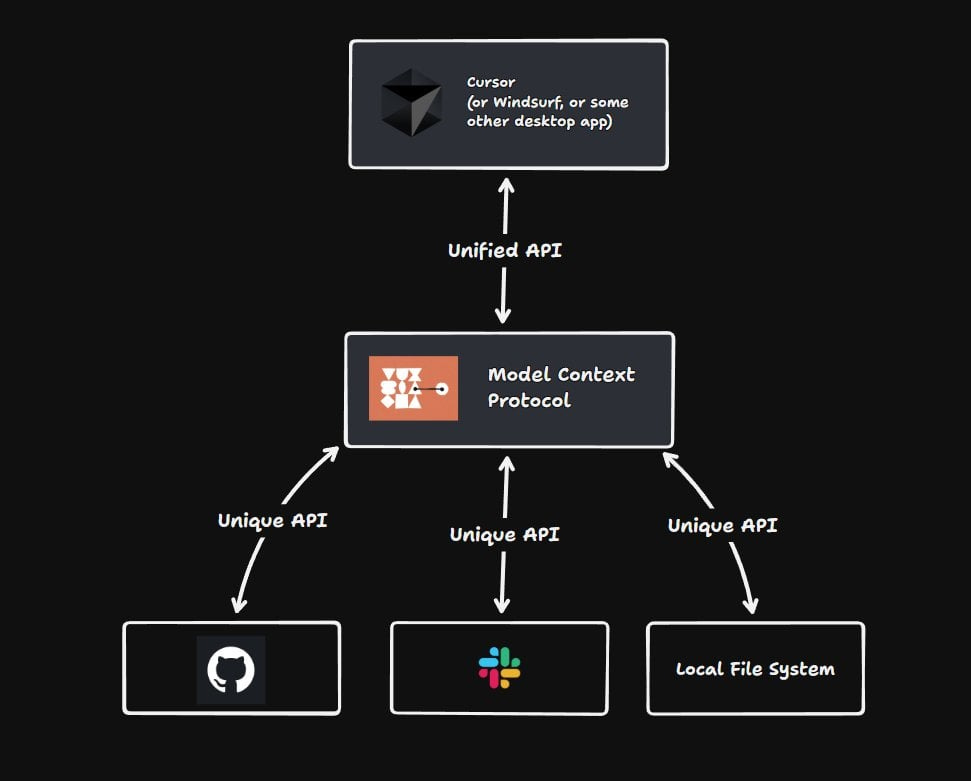

The Model Context Protocol (MCP) is an open standard designed to facilitate secure, two-way connections between AI systems (like GPT, Claude, Gemini, or others) and external data repositories, whether that means local files, databases, or business tools in the cloud. Picture it as the “plug-and-play” approach to AI, analogous to the role USB-C played in the electronics industry. Instead of dealing with a mess of custom adapters and cables for each data source or vendor, MCP offers a single, universal interface.

By providing a common language for AI applications to communicate with data and services, MCP streamlines the integration process. Developers no longer have to write fresh code each time they connect a new tool or data source. The protocol handles the plumbing, so they can focus on business logic, user experience, and scaling.

Why is this significant? Because the time-to-market for new AI features is drastically reduced. Rather than spending hours or days building specialized connectors, teams can quickly integrate the necessary data streams using an existing MCP server or client. That’s speed, efficiency, and reliability.
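To make the “common language” concrete: MCP messages are framed as JSON-RPC 2.0. The sketch below builds a `tools/call` request and a matching response by hand. The tool name `query_sales`, its arguments, and the two helper functions are hypothetical and exist only for illustration; they are not part of any SDK.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids; any unique value works


def make_request(method: str, params: dict) -> dict:
    """Build a JSON-RPC 2.0 request envelope."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params}


def make_result(request: dict, result: dict) -> dict:
    """Build the success response that answers `request` (same id)."""
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# Hypothetical tool name and arguments, for illustration only.
request = make_request(
    "tools/call",
    {"name": "query_sales", "arguments": {"region": "EMEA", "limit": 5}},
)
response = make_result(
    request,
    {"content": [{"type": "text", "text": "5 rows returned"}]},
)

wire = json.dumps(request)  # the text that would travel over the transport
print(wire)
print(json.dumps(response))
```

Because every server speaks this same envelope, a host only needs one piece of code to talk to all of them; what changes between servers is the list of tool names and their arguments.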

3. The USB-C Analogy: Why It Makes Sense

We’ve likened MCP to USB-C, but what does that really mean?

- Universality: Much like how USB-C replaced a variety of cable standards (micro-USB, USB-A, etc.), MCP replaces a patchwork of specialized integrations with a single protocol.

- Ease of Use: With USB-C, you can plug in your phone, laptop, or peripheral without fiddling around with orientation or compatibility concerns. Similarly, with MCP, AI models can connect to various data sources through a standardized interface—no additional complexities involved.

- Scalability: USB-C supports data transfer, audio, video, and charging across devices of all shapes and sizes. MCP can facilitate interactions across local storage, databases, third-party APIs, or cloud services, depending on your AI’s needs.

- Vendor Independence: A universal connector ensures you aren’t locked into one brand or solution. MCP similarly ensures that you can switch AI providers or data services without massive refactoring.

By adopting MCP as the de facto standard, the AI community stands to benefit from universal compatibility, faster innovation cycles, and improved overall interoperability.

4. The Core Components of MCP

To understand how MCP works under the hood, let’s break down its fundamental building blocks:

- MCP Hosts

These are the host applications (Claude Desktop, an IDE, or a chatbot front-end, for example) that want to use data through MCP. Essentially, if an application needs AI features that pull data from somewhere else, it acts as an MCP Host.

- MCP Clients

Integrated into the host applications, each MCP Client maintains a one-to-one connection with a single MCP Server. Think of them as the “cables” that carry instructions from your application to an MCP Server.

- MCP Servers

Lightweight programs that run locally or in the cloud to expose specific capabilities in a standardized MCP format. These servers can interface with local data sources (e.g., files on your computer), cloud services (like Slack or Salesforce), or external APIs, making them the hub of data interchange.

- Local Data Sources

Everything from your laptop’s file system to an on-premises SQL database can be considered a local data source. MCP Servers can securely access and retrieve the required data, bridging the gap between your AI model and your private data.

- Remote Services

These are internet-facing APIs or external systems, such as Google Drive, GitHub, or hosted Postgres databases. The real power of MCP is in how seamlessly it can connect your AI model to these services.

By understanding these components, developers can architect solutions that are not just flexible but also secure, a critical requirement in today’s compliance and data privacy environment.
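The relationship between hosts, clients, and servers can be sketched as a toy in-process model. This is not the real SDK and skips transports, initialization, and error handling; the class names (`ToyHost`, `ToyClient`, `ToyServer`) and the two example tools are invented for illustration. Only the method names `tools/list` and `tools/call` come from the protocol itself.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ToyServer:
    """Stands in for an MCP Server: exposes named tools over one interface."""
    name: str
    tools: dict[str, Callable[..., str]] = field(default_factory=dict)

    def handle(self, method: str, params: dict) -> dict:
        if method == "tools/list":
            return {"tools": [{"name": n} for n in sorted(self.tools)]}
        if method == "tools/call":
            fn = self.tools[params["name"]]
            text = fn(**params.get("arguments", {}))
            return {"content": [{"type": "text", "text": text}]}
        raise ValueError(f"unsupported method: {method}")


@dataclass
class ToyClient:
    """Stands in for an MCP Client: bound to exactly one server."""
    server: ToyServer

    def request(self, method: str, params: Optional[dict] = None) -> dict:
        return self.server.handle(method, params or {})


@dataclass
class ToyHost:
    """Stands in for an MCP Host: owns one client per connected server."""
    clients: dict[str, ToyClient] = field(default_factory=dict)

    def connect(self, server: ToyServer) -> None:
        self.clients[server.name] = ToyClient(server)

    def available_tools(self) -> list[str]:
        return [
            f"{name}.{tool['name']}"
            for name, client in self.clients.items()
            for tool in client.request("tools/list")["tools"]
        ]


# Hypothetical servers: one wraps a local source, one a remote service.
files = ToyServer("files", {"read_note": lambda path: f"contents of {path}"})
tickets = ToyServer("tickets", {"count_open": lambda: "3 open tickets"})

host = ToyHost()
host.connect(files)
host.connect(tickets)

print(host.available_tools())
print(host.clients["files"].request(
    "tools/call", {"name": "read_note", "arguments": {"path": "todo.txt"}}
))
```

The design point to notice is that the host never calls a data source directly. It always goes through a client, and each client talks to exactly one server, which is what keeps servers interchangeable.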

5. Benefits of Implementing MCP in Your AI Workflow

5.1 Enhanced Data Accessibility

MCP enables AI models to retrieve real-time data, ensuring that the generated responses or analytical outputs are both timely and contextually relevant. Gone are the days of relying solely on static data sources or outdated information.

5.2 Standardized Integration

No more custom-coded connectors for each tool or data repository. With MCP’s universal standard, developers and organizations can drastically cut down on integration complexity, saving both time and resources.
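A back-of-the-envelope calculation shows where the savings come from. If every application needs its own connector for every tool, the work grows with the product of the two; with a shared protocol it grows roughly with their sum. The numbers below are illustrative, not measurements, and the model ignores the effort of writing each server.

```python
def bespoke_connectors(apps: int, tools: int) -> int:
    """Every application needs its own connector for every tool."""
    return apps * tools


def protocol_adapters(apps: int, tools: int) -> int:
    """Each application implements the client side once; each tool gets one server."""
    return apps + tools


apps, tools = 4, 6  # illustrative numbers only
print(bespoke_connectors(apps, tools))  # 24
print(protocol_adapters(apps, tools))   # 10
```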

5.3 Flexibility and Vendor Independence

Because MCP is an open standard, you’re not locked into a single AI model or vendor. Switch from Claude to GPT or any other LLM without rewriting your entire integration pipeline.

5.4 Security and Compliance

MCP was designed with enterprise needs in mind. Because every request from a model passes through an MCP Server, credentials and access rules can stay with the system that owns the data, and there is a single point at which calls can be restricted, logged, and audited. That makes compliance reviews more tractable for organizations in regulated industries.
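One way a host might keep data protected is an allow-list that is checked before any tool call is forwarded to a server. The sketch below is an assumption about how such a policy could look, not a feature defined by MCP; `ToolPolicy`, `guarded_call`, and the `crm` tool names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ToolPolicy:
    """Hypothetical host-side policy: which tools may be called on each server."""
    allowed: dict[str, set[str]] = field(default_factory=dict)

    def allow(self, server: str, tool: str) -> None:
        self.allowed.setdefault(server, set()).add(tool)

    def is_allowed(self, server: str, tool: str) -> bool:
        return tool in self.allowed.get(server, set())


def guarded_call(policy: ToolPolicy, server: str, tool: str, run):
    """Invoke `run()` only if the policy permits this server/tool pair."""
    if not policy.is_allowed(server, tool):
        raise PermissionError(f"{server}.{tool} is not on the allow-list")
    return run()


policy = ToolPolicy()
policy.allow("crm", "read_customer")  # read access only; no write tool granted

print(guarded_call(policy, "crm", "read_customer", lambda: "ok"))
try:
    guarded_call(policy, "crm", "delete_customer", lambda: "deleted")
except PermissionError as exc:
    print(f"blocked: {exc}")
```

Denying by default, as here, means a newly added tool on a server stays unreachable until someone explicitly grants it.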

6. Practical Applications of MCP

The versatility of MCP has paved the way for a diverse range of use cases across various sectors:

- Enhanced AI Assistants

AI assistants can tap into multiple data sources (e.g., CRM systems, knowledge bases) for more accurate, context-aware responses.

- Knowledge Management Systems

Internal documentation scattered across platforms? MCP can unify them, enabling advanced AI-powered search and retrieval.

- Customer Support Chatbots

Offer personalized support by connecting to customer profiles, ticket histories, and product documentation in real time.

- Content Creation Tools

Marketers and writers benefit from AI models that have access to an expansive range of data, making generated content both more relevant and more creative.

- Software Development

Integrate AI into your IDE for code generation, auto-documentation, and debugging by pulling from Git repositories, Stack Overflow, or your own codebase.

- Financial Analysis

Real-time access to market data, broker APIs, and portfolio analytics transforms your LLM into a powerful ally for investment insights.

- Healthcare

Connect to medical databases or electronic health records, enabling AI-driven diagnostics and research assistance without compromising data security.

7. Getting Started with MCP

If you’re a developer eager to implement MCP, you’ll be delighted to discover the following resources:

- MCP Documentation

Anthropic and community contributors have put together thorough guides and tutorials on setting up and using MCP. From installation to advanced use cases, the official docs have you covered.

- SDKs

Software Development Kits are available in multiple programming languages, including Python, TypeScript, Java, Kotlin, and C#. These SDKs streamline the creation of MCP Clients and MCP Servers for your chosen ecosystem.

- Example Implementations

There is a growing library of pre-built MCP Servers for enterprise systems such as Google Drive, Slack, GitHub, and Postgres. These examples offer a quick way to learn by examining real-world use cases.

8. Community and Collaboration

Because MCP is an open-source initiative, it thrives on user and developer contributions. If you believe in a more interconnected AI future, here’s how you can get involved:

- Participate in Discussions

Whether through forums, GitHub issues, or specialized channels, your questions, insights, and feedback help shape the future of MCP.

- Contribute to Codebases

If you spot a bug or have a feature suggestion, dive into the codebase and submit a pull request. Open standards rely on collaborative efforts.

- Share Implementations

Build and share custom MCP Servers for niche applications or advanced capabilities. The more the community expands its library of servers, the more robust MCP becomes.

9. MCP in Action: Real-World Scenarios

Let’s explore how MCP might function in a few actual scenarios:

Scenario 1: Retail Inventory Management

- Problem: A major retail chain needs to forecast product demand in real time.

- MCP’s Role: The retailer sets up an MCP Server that connects to internal sales databases, supply chain APIs, and even external market trend data providers.

- Outcome: An LLM integrated with the MCP Server can now pull real-time inventory stats, cross-reference them with incoming purchase orders, and even factor in external economic indicators—instantly generating restock recommendations and sales forecasts.
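The restock logic behind such a server could be as small as the sketch below. It is a hypothetical tool handler, not taken from any real deployment: the `StockLine` fields, the 14-day cover target, and the sample data are all assumptions, and a real server would read these values from the sales database instead of a hard-coded list.

```python
import math
from dataclasses import dataclass


@dataclass
class StockLine:
    sku: str
    on_hand: int          # units currently in the warehouse
    on_order: int         # units already on incoming purchase orders
    daily_demand: float   # recent average units sold per day


def restock_recommendation(line: StockLine, cover_days: int = 14) -> int:
    """Units to order so stock plus open orders covers `cover_days` of demand."""
    target = math.ceil(line.daily_demand * cover_days)
    return max(0, target - line.on_hand - line.on_order)


def restock_tool(lines: list[StockLine]) -> str:
    """Tool handler: returns plain text an LLM could fold into its answer."""
    rows = [
        f"{line.sku}: order {qty}"
        for line in lines
        if (qty := restock_recommendation(line)) > 0
    ]
    return "\n".join(rows) or "no restock needed"


# Made-up sample data standing in for the sales database.
sample = [
    StockLine("SKU-1", on_hand=40, on_order=0, daily_demand=5.0),
    StockLine("SKU-2", on_hand=200, on_order=50, daily_demand=3.5),
]
print(restock_tool(sample))  # SKU-1: order 30
```

Keeping the arithmetic in the server and returning plain text means the model does not have to compute quantities itself; it only has to decide when to call the tool and how to present the result.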

Scenario 2: Legal Document Analysis

- Problem: A legal firm wants to use an AI assistant to parse and summarize large sets of legal documents and case files, which are stored in a variety of places including cloud drives and local archives.

- MCP’s Role: The firm sets up an MCP Server that interfaces with its internal document repository, plus any relevant external legal databases.

- Outcome: The AI assistant seamlessly retrieves documents, references them for relevant case law, and provides more accurate summaries and recommendations. Secure protocols ensure client confidentiality remains intact.

Scenario 3: Collaborative Healthcare Research

- Problem: A research hospital needs to combine patient data, lab results, and published research articles to assist doctors and researchers in making data-driven decisions.

- MCP’s Role: An MCP Server integrates with local patient databases (maintaining strict HIPAA compliance) and remote medical journals.

- Outcome: LLM-based analysis tools can fetch the latest research, correlate it with patient histories, and generate insights or treatment suggestions, all in a matter of minutes.

These scenarios underscore how MCP can revolutionize workflows and decision-making across diverse industries—from retail and legal to healthcare and beyond.

10. Overcoming Common MCP Adoption Challenges

No new standard is without its hurdles. Here are a few challenges you might encounter—and how to tackle them:

- Security Concerns

Organizations often hesitate to expose internal data to any external protocol. Emphasize that MCP is built with security as a core feature. Robust encryption, access controls, and a modular architecture allow granular permission settings.

- Organizational Buy-In

While developers might be enthusiastic about MCP, upper management might be more cautious. Demonstrate ROI through quick-win use cases (like reducing the time spent coding integrations) so that leadership sees tangible benefits.

- Learning Curve

Some teams might be intimidated by a new protocol. Comprehensive documentation, example repositories, and community forums can ease the learning curve. Encourage knowledge-sharing sessions where more experienced developers guide newcomers.

- Vendor Lock-In Myths

Ironically, some might fear that adopting a standard like MCP results in vendor lock-in. Clarify that MCP is open-source, meaning it actively works against such lock-in by facilitating multi-vendor interoperability.

11. Future Developments: What’s Next for MCP?

The Model Context Protocol (MCP) continues to evolve. Its open-source community is exploring new avenues and features, such as:

- AI Model Customization: Fine-tuning the specific ways AI models handle requests from MCP Servers for domain-specific tasks.

- Extended Tool Support: As more companies adopt MCP, anticipate a surge in custom servers for specialized industries—think biotech labs, astronomy datasets, or even urban planning sensors.

- Increased Automation: Automations that handle routine tasks like data pre-processing, reformatting, or generating logs for compliance directly from the MCP pipeline.

Given how quickly AI and LLMs are advancing, MCP is poised to become even more integral—potentially serving as the backbone for next-gen enterprise solutions.

12. Final Thoughts: Why MCP Matters

The potential of large language models is immense, but harnessing it effectively requires more than just sophisticated algorithms. Real-world impact demands real-world data, and that’s where the Model Context Protocol (MCP) truly shines. It simplifies how AI systems connect to the lifeblood of modern applications—data—while maintaining security, flexibility, and vendor neutrality.

By adopting MCP, you’re not only choosing a cutting-edge solution for AI integration but also joining a community that believes in openness, collaboration, and future-proofing. Whether you’re in healthcare, finance, software development, or any domain reliant on timely data insights, MCP offers a streamlined path toward AI-powered solutions that truly deliver.

13. How to Get Involved

- Visit the Official MCP Documentation: Start by exploring the official docs, which include step-by-step guides and advanced tips.

- Explore Example Repositories: Check out pre-built connectors for popular enterprise tools.

- Join Community Channels: Get your questions answered and contribute to lively discussions shaping the future of MCP.

- Build Your Own MCP Server: Experiment with local data sources or specialized services. Share your solutions with the community, and watch MCP evolve even further.

Wrapping Up

Whether you’re an AI enthusiast, a seasoned developer, or a decision-maker eyeing AI initiatives, the Model Context Protocol (MCP) offers a simplified, secure, and scalable way to bring your LLMs into the data-driven era. Think of it as the next logical step in bridging “what AI knows” and “what the world is actually doing in real time.”

With MCP, you’re not just integrating data—you’re weaving AI more deeply and effectively into the fabric of modern business, research, and technology. So take that next step. Start exploring, start building, and let MCP accelerate your journey into a truly intelligent future.

Frequently Asked Questions (FAQ) on Model Context Protocol (MCP)

1. What is the Model Context Protocol (MCP)?

Answer:

The Model Context Protocol (MCP) is an open standard designed to facilitate secure, two-way connections between AI models and external data sources or tools. Developed by Anthropic, MCP aims to simplify and standardize how large language models (LLMs) access real-time information from both local and remote services.

2. Why is MCP often compared to USB-C?

Answer:

MCP is likened to USB-C because it serves as a universal “plug-and-play” protocol for AI integrations. Similar to how USB-C replaced multiple cable standards, MCP eliminates the need for custom-coded solutions by offering a single, standardized interface for connecting AI models to various data sources and tools.

3. How does MCP enhance data accessibility for AI models?

Answer:

With MCP, AI models can securely fetch real-time data from multiple repositories—local file systems, databases, or cloud services—using a standardized interface. This removes the silo effect often seen in AI implementations where models are cut off from relevant or timely data.

4. What are the main components of MCP?

Answer:

- MCP Hosts – The applications (e.g., IDEs or AI assistant apps) that seek data.

- MCP Clients – Connectors within the host applications that communicate with MCP servers.

- MCP Servers – Lightweight programs that provide standardized access to data sources and tools.

- Local Data Sources – Files, databases, or services on a user’s system.

- Remote Services – Cloud-based or external APIs accessible over the internet.

5. What are some practical use cases for MCP?

Answer:

- Customer Support Chatbots: Access customer databases in real time for personalized assistance.

- Knowledge Management: AI models can search internal documentation or wikis effectively.

- Software Development: Integrate AI into coding environments for tasks like code generation and debugging.

- Financial Analysis: Pull real-time market data for investment advice or trading strategies.

- Healthcare: Retrieve patient records or medical research data with robust security in place.

6. Is MCP secure enough for enterprise environments?

Answer:

Yes. MCP adopts best practices around encryption and secure communications. Its modular architecture allows granular permission settings, ensuring data stays protected and compliant with organizational or industry-specific regulations.

7. How does MCP address vendor lock-in concerns?

Answer:

Because MCP is an open standard, it’s designed to work with any LLM provider or data source. This means you can switch AI providers or integrate new tools without major rewrites, significantly reducing the risk of vendor lock-in.

8. What resources are available for developers interested in MCP?

Answer:

- Official Documentation – Contains detailed guides and tutorials.

- SDKs – Available in multiple languages (e.g., Python, TypeScript, Java, Kotlin, C#).

- Example Implementations – Pre-built MCP servers for popular services like Slack, Google Drive, GitHub, or Postgres.

9. Where can I find the MCP community or get support?

Answer:

You can often find support through official documentation sites, GitHub repositories, and user forums dedicated to MCP. Engaging with these communities offers opportunities for collaboration, code contributions, and knowledge-sharing.

10. How do I get started with MCP today?

Answer:

Begin by reviewing the official MCP documentation and setting up a basic MCP Server for a data source you use frequently (e.g., a local database or a cloud service). Then integrate an MCP Client into your AI application or IDE, and start experimenting. As you gain familiarity, explore the broader ecosystem of pre-built MCP servers to expand your app’s capabilities.